Joint Learning from Earth Observation and OpenStreetMap Data to Get Faster Better Semantic Maps

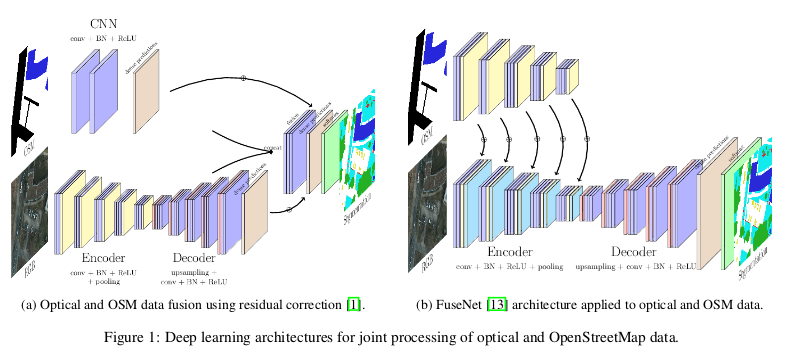

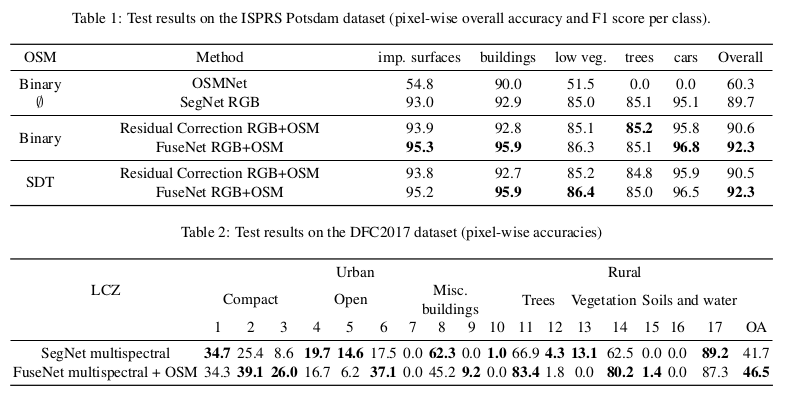

The authors propose several use cases and deep network architectures that leverage OpenStreetMap (OSM) data for semantic labeling of aerial and satellite images. In particular, they investigate fusion-based architectures and coarse-to-fine segmentation as ways to include the OpenStreetMap layer in convolutional networks. They validate the approach on two public datasets: ISPRS Potsdam and DFC2017. They show that OpenStreetMap data can be integrated efficiently into vision-based deep learning models, and that it significantly improves both the accuracy and the convergence speed of the networks.

Segmentation Network

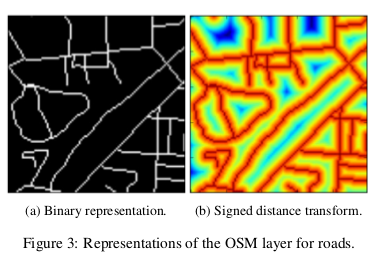

Binary Vs. Signed Distance Transform (SDT)

- Binary: each OSM raster gets its own channel in the input tensor, a binary map marking whether the raster class is present at each pixel.

- SDT: each OSM raster gets its own channel holding the signed distance transform d, with d > 0 if the pixel is inside the class and d < 0 otherwise.
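
The two encodings can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes each OSM class is already rasterized as a boolean mask, and it uses SciPy's Euclidean distance transform as one possible choice of d. The function names `binary_channel` and `signed_distance_channel` are hypothetical.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def binary_channel(mask: np.ndarray) -> np.ndarray:
    """Binary encoding: 1 where the class is present, 0 elsewhere."""
    return mask.astype(np.float32)


def signed_distance_channel(mask: np.ndarray) -> np.ndarray:
    """Signed distance encoding: d > 0 inside the class, d < 0 outside.

    Assumes the mask contains both class and non-class pixels.
    """
    mask = mask.astype(bool)
    # For pixels inside the class: distance to the nearest outside pixel.
    inside = distance_transform_edt(mask)
    # For pixels outside the class: distance to the nearest inside pixel.
    outside = distance_transform_edt(~mask)
    return (inside - outside).astype(np.float32)


# Toy 5x5 raster with a 3x3 "building" in the middle (illustrative data).
building = np.zeros((5, 5), dtype=bool)
building[1:4, 1:4] = True

binary = binary_channel(building)
sdt = signed_distance_channel(building)

# One channel per OSM class, stacked channel-first for a convolutional network.
osm_tensor = np.stack([binary, sdt], axis=0)
```

Compared with the binary map, the signed distance channel carries information at every pixel (how far it is from the class boundary), not only at pixels where the class is present.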

Experiments

Problems

- For segmentation, only the road, building, and vegetation land-use OSM classes can be used.

- The OSM data is more recent than the ISPRS dataset, so some buildings are present in OSM but not in the Potsdam data.

- Using a separate channel for each OSM class can quickly exhaust the available memory.
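
To give a rough sense of the last point, the sketch below estimates the raw size of the OSM input alone when each class is stored as one dense float32 channel. It is back-of-the-envelope arithmetic under stated assumptions (6000 x 6000 pixel tiles, as in ISPRS Potsdam, and float32 storage); the helper `channel_bytes` is hypothetical, and the estimate ignores network activations, which usually dominate training memory.

```python
def channel_bytes(height: int, width: int, n_channels: int,
                  bytes_per_value: int = 4) -> int:
    """Raw size in bytes of a dense (n_channels, height, width) raster.

    bytes_per_value defaults to 4, i.e. float32 storage (an assumption).
    """
    return height * width * n_channels * bytes_per_value


# Assumed tile size: 6000 x 6000 pixels.
for n in (1, 3, 10):
    size_mb = channel_bytes(6000, 6000, n) / 1e6
    print(f"{n} OSM channel(s): {size_mb:.0f} MB per tile")
```

The footprint grows linearly with the number of OSM classes, which is one reason to restrict the input to a few classes or to store binary masks in a compact integer type.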