On the importance of single directions for generalization

Is a cat neuron a good thing? A paper from DeepMind that explores the relationship between class selectivity and generalization.

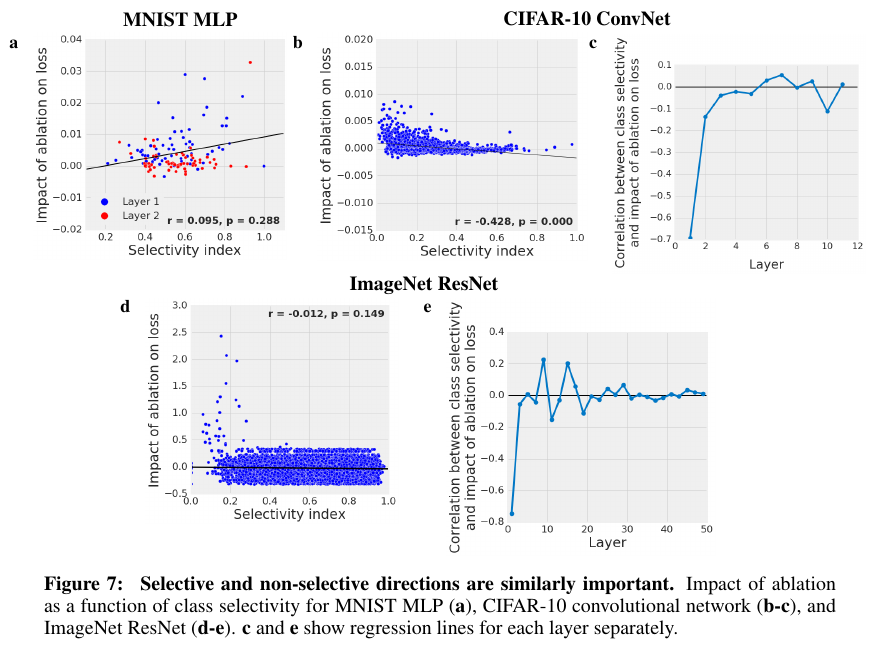

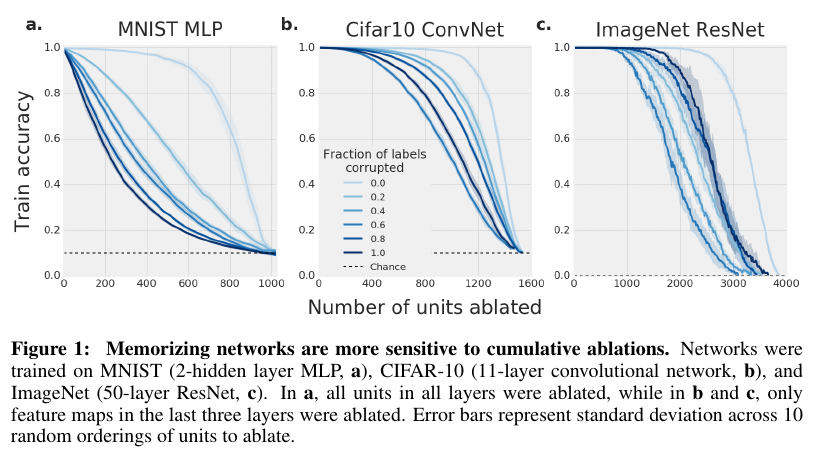

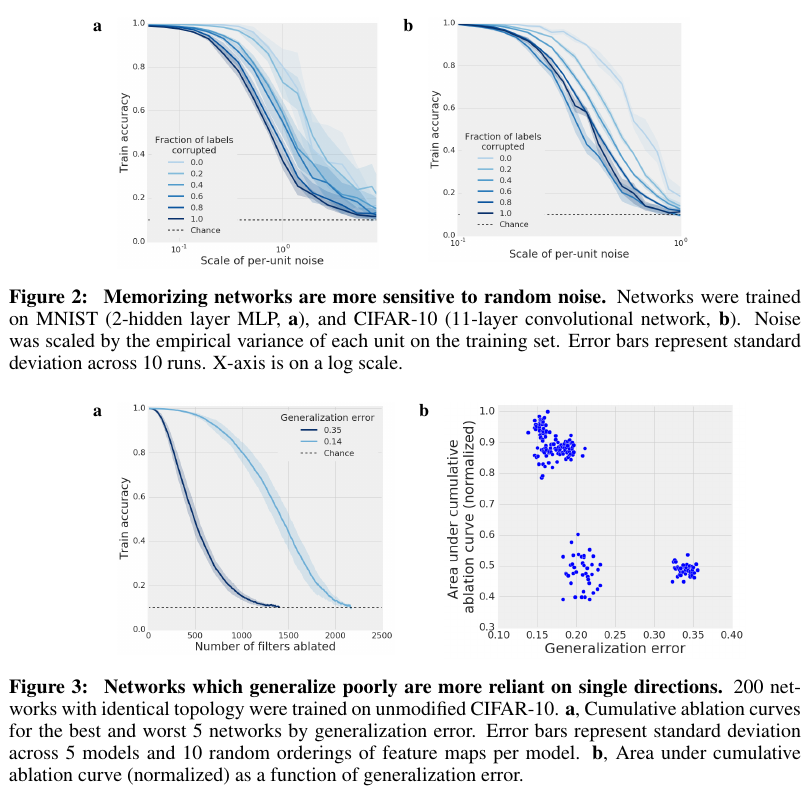

In a layer with high class selectivity, a single neuron fires strongly for one class while the other neurons stay mostly inactive, so the activation vector points along a single direction. A network that relies on such single directions is sensitive to ablation: removing random neurons greatly changes its output. The authors therefore use random ablation as their main experimental tool for testing their hypotheses.
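As a rough illustration of the ablation procedure, here is a minimal NumPy sketch. It assumes the hidden activations are available as an array of shape `(n_samples, n_units)` and that a linear readout maps them to class scores. The names `ablate_units` and `ablation_curve` are hypothetical, and clamping ablated units to zero is an assumption made for simplicity.

```python
import numpy as np

def ablate_units(activations, fraction, rng):
    """Return a copy of `activations` with a random subset of units set to zero."""
    n_units = activations.shape[1]
    n_ablate = int(round(fraction * n_units))
    idx = rng.choice(n_units, size=n_ablate, replace=False)
    ablated = activations.copy()
    ablated[:, idx] = 0.0  # assumption: ablated units are clamped to zero
    return ablated

def ablation_curve(activations, readout, labels, fractions, n_repeats=10, seed=0):
    """Mean accuracy of a linear readout at each ablation fraction."""
    rng = np.random.default_rng(seed)
    curve = []
    for fraction in fractions:
        accuracies = []
        for _ in range(n_repeats):
            hidden = ablate_units(activations, fraction, rng)
            predictions = (hidden @ readout).argmax(axis=1)
            accuracies.append((predictions == labels).mean())
        curve.append(float(np.mean(accuracies)))
    return np.array(curve)
```

A network that relies heavily on single directions should show accuracy dropping quickly as `fraction` grows, while a more distributed representation should degrade more gradually.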

Generalization and single directions

- Networks that rely more on single directions tend to have lower generalization capability

- Introducing noise in the training labels forces the network to memorize, which gives a controlled way to compare memorizing networks with generalizing ones
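The label-noise setup can be sketched as follows. This is only an illustration: the function name `corrupt_labels` and its parameters are hypothetical, and drawing the replacement labels uniformly at random is an assumption (a replacement can coincide with the original label by chance).

```python
import numpy as np

def corrupt_labels(labels, fraction, n_classes, seed=0):
    """Replace a random `fraction` of labels with uniformly drawn class indices."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    noisy = labels.copy()
    n_corrupt = int(round(fraction * len(labels)))
    idx = rng.choice(len(labels), size=n_corrupt, replace=False)
    # Assumption: replacement labels are uniform over all classes,
    # so some of them may equal the original label.
    noisy[idx] = rng.integers(0, n_classes, size=n_corrupt)
    return noisy
```

Training the same architecture on `corrupt_labels(y, f, n_classes)` for increasing `f` yields networks that have to memorize a growing share of the training set.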

Single directions for model selection

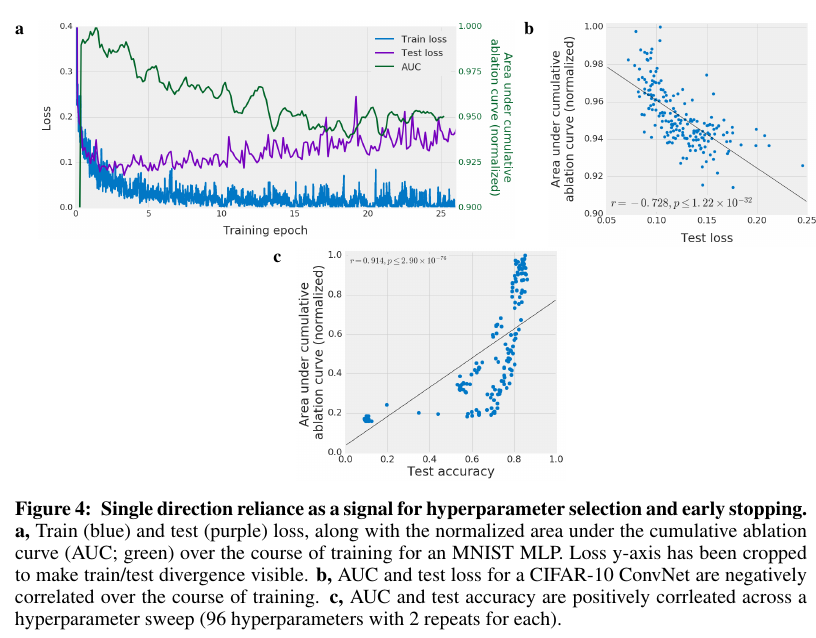

- Single direction reliance is a good indicator of generalization

- Could be used instead of a validation/test set

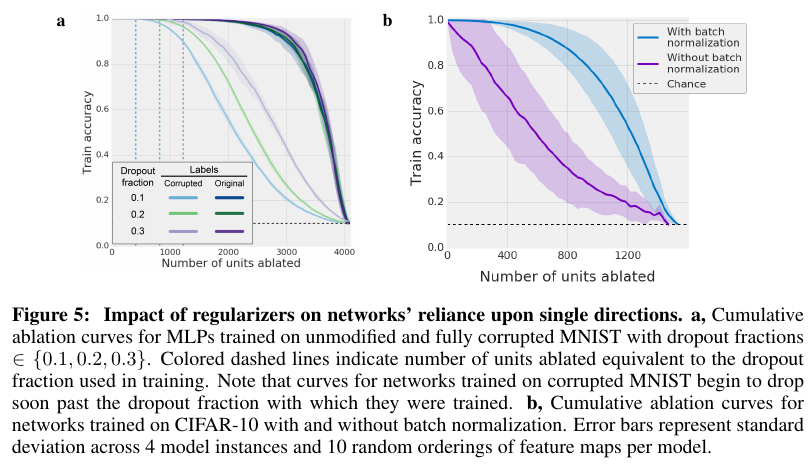

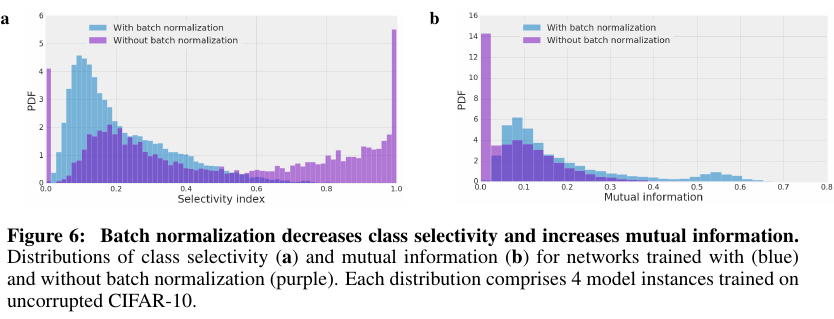
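One way to turn single direction reliance into a model selection score is to summarize each ablation curve by its area and prefer the model with the largest area. The sketch below assumes the curves have already been measured. The helper names and the dictionary layout are hypothetical, and this is not necessarily the exact scoring the authors used.

```python
import numpy as np

def area_under_curve(fractions, accuracies):
    """Trapezoidal area under an accuracy-vs-ablation-fraction curve."""
    f = np.asarray(fractions, dtype=float)
    a = np.asarray(accuracies, dtype=float)
    return float(np.sum(np.diff(f) * (a[1:] + a[:-1]) / 2.0))

def rank_models(fractions, curves):
    """Sort model names from least to most reliant on single directions."""
    scores = {name: area_under_curve(fractions, acc) for name, acc in curves.items()}
    return sorted(scores, key=scores.get, reverse=True)
```

For example, `rank_models([0.0, 0.5, 1.0], {"a": [1.0, 0.9, 0.8], "b": [1.0, 0.5, 0.1]})` puts `"a"` first, since its accuracy degrades more slowly under ablation.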

Dropout and BatchNorm

- BatchNorm discourages reliance on single directions

- Dropout makes the network robust to ablation only up to the dropout fraction used during training; beyond that fraction it offers no additional protection

Does class selectivity make a good neuron?

- The class selectivity of a single direction is uncorrelated with its importance to the network’s output

- We should not analyse DNNs by looking for cat neurons or other class selective neurons
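For reference, class selectivity of a unit can be quantified with a selectivity index of the form (μ_max − μ_rest) / (μ_max + μ_rest), where μ_max is the unit's highest class-conditional mean activation and μ_rest is its mean activation over the remaining classes. The sketch below assumes non-negative activations (for example post-ReLU) of shape `(n_samples, n_units)` and at least one sample per class. The function name and the `eps` term are illustrative choices.

```python
import numpy as np

def class_selectivity(activations, labels, n_classes, eps=1e-12):
    """Per-unit selectivity index in [0, 1]; 1 means the unit fires for one class only."""
    labels = np.asarray(labels)
    # Class-conditional mean activation of each unit: shape (n_classes, n_units).
    class_means = np.stack(
        [activations[labels == c].mean(axis=0) for c in range(n_classes)]
    )
    mu_max = class_means.max(axis=0)
    # Mean over the remaining classes (all classes except the preferred one).
    mu_rest = (class_means.sum(axis=0) - mu_max) / (n_classes - 1)
    # eps avoids division by zero for units that never activate.
    return (mu_max - mu_rest) / (mu_max + mu_rest + eps)
```

Comparing this index with the accuracy drop caused by ablating each unit individually is one way to check whether highly selective units matter more than the rest.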