Deep Pyramidal Residual Networks

Paper:

This paper has two main contributions: a pyramidal architecture that increases the feature map dimension gradually across all layers, and a slightly modified ResNet block with a zero-padded shortcut. The authors also try different layer combinations inside the block.

Model

This model is a regular ResNet, but the number of feature maps in each layer is calculated by a formula.

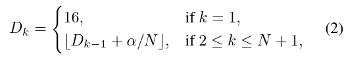

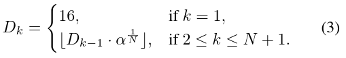

With these equations, the number of feature maps grows a little at every unit instead of jumping only at the downsampling stages of a regular ResNet, as shown in Figure 2.

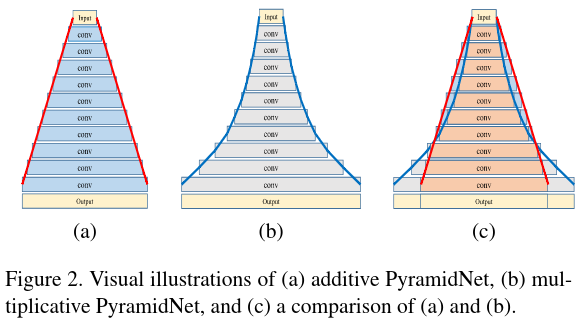
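To make the widening rule concrete, here is a minimal sketch of how the per-unit channel counts could be computed. It assumes the additive rule adds `alpha / N` channels per unit and the multiplicative rule scales by `alpha ** (1 / N)`, starting from 16 channels, which is my reading of the paper's equations. The function name `pyramid_widths`, its parameters, and the choice to keep an unrounded running width before flooring are illustrative assumptions, not the authors' code.

```python
import math


def pyramid_widths(num_units, alpha, base=16, mode="additive"):
    """Return channel counts for the stem followed by each residual unit.

    Hypothetical helper: `alpha` is the widening factor and `num_units`
    the total number of residual units (N), as assumed in the lead-in.
    """
    widths = [base]
    width = float(base)  # unrounded running width (an implementation choice)
    for _ in range(num_units):
        if mode == "additive":
            width += alpha / num_units          # same increment at every unit
        elif mode == "multiplicative":
            width *= alpha ** (1.0 / num_units)  # same ratio at every unit
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        widths.append(math.floor(width))
    return widths


print(pyramid_widths(4, 48))                         # [16, 28, 40, 52, 64]
print(pyramid_widths(2, 4, mode="multiplicative"))   # [16, 32, 64]
```

In the additive case the last unit ends up with `base + alpha` channels, so `alpha` directly controls how wide the top of the pyramid is.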

The new shortcut in the residual block zero-pads the input features so that their channel count matches the wider block output, and it can be seen as a new residual path.

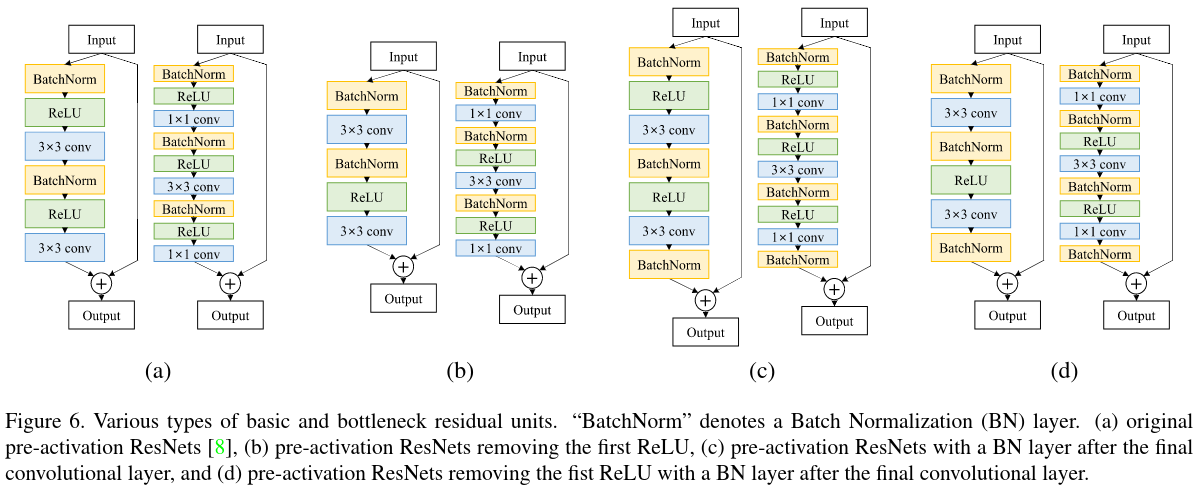
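The zero-padded shortcut can be sketched in plain Python as follows. A feature map is represented here as a list of channels, each an H x W list of lists; that layout and the helper names `zero_padded_shortcut` and `add_shortcut` are assumptions made for illustration, and a real implementation would use a tensor library instead.

```python
def zero_padded_shortcut(x, out_channels):
    """Copy feature map `x` and append all-zero channels up to `out_channels`."""
    in_channels = len(x)
    if out_channels < in_channels:
        raise ValueError("shortcut cannot reduce the number of channels")
    height, width = len(x[0]), len(x[0][0])
    zeros = [
        [[0.0] * width for _ in range(height)]
        for _ in range(out_channels - in_channels)
    ]
    return [[row[:] for row in channel] for channel in x] + zeros


def add_shortcut(residual, x):
    """Element-wise sum of the residual-branch output and the padded shortcut."""
    shortcut = zero_padded_shortcut(x, len(residual))
    return [
        [[r + s for r, s in zip(r_row, s_row)]
         for r_row, s_row in zip(r_channel, s_channel)]
        for r_channel, s_channel in zip(residual, shortcut)
    ]


x = [[[1.0, 2.0]]]                             # 1 channel, 1 x 2
residual = [[[10.0, 10.0]], [[5.0, 5.0]]]      # 2 channels, 1 x 2
print(add_shortcut(residual, x))               # [[[11.0, 12.0]], [[5.0, 5.0]]]
```

The padded channels receive only the residual branch's output, while the original channels receive both the identity and the residual, which is one way to read the "new residual path" remark.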

Finally, they find empirically that the position of the ReLU activations and batch normalization layers within the block affects performance.

Results

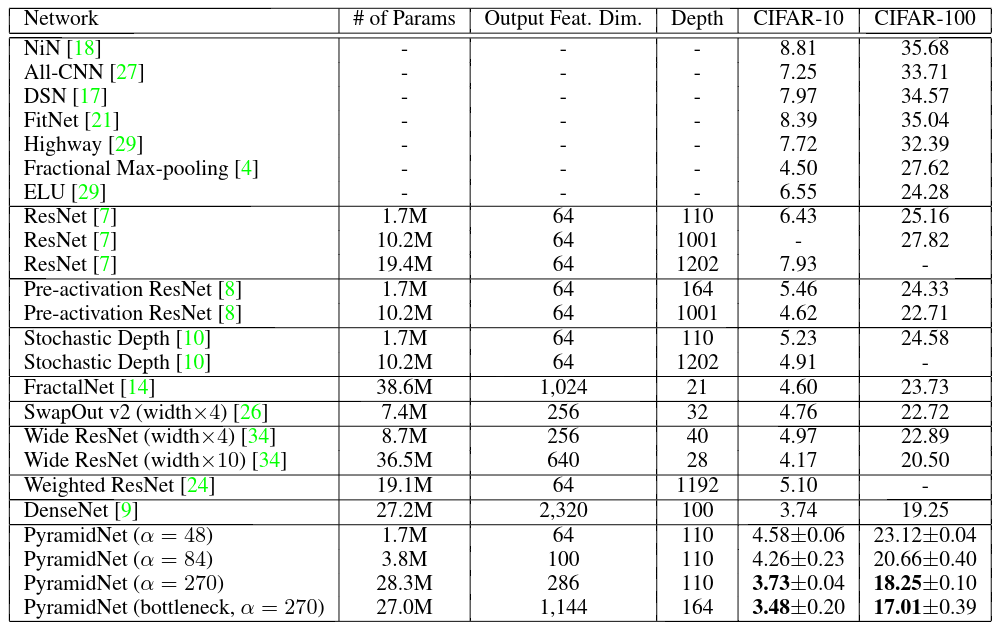

They report results on three datasets: CIFAR-10, CIFAR-100, and ImageNet.

CIFAR-10 & CIFAR-100

ImageNet