Universal adversarial perturbations

Summary

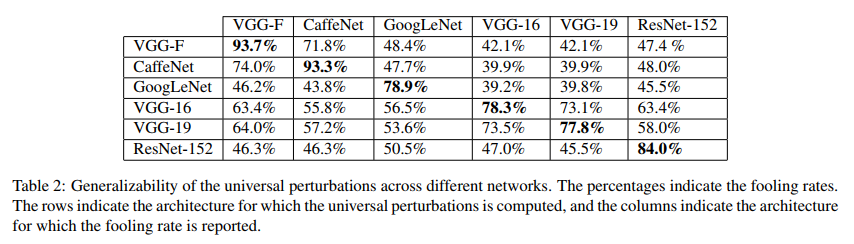

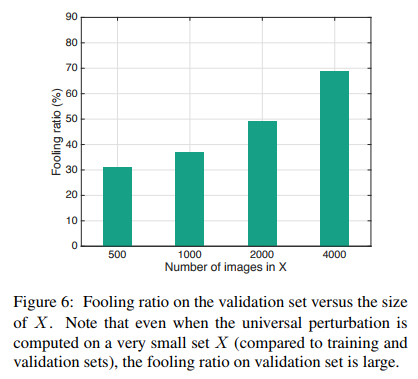

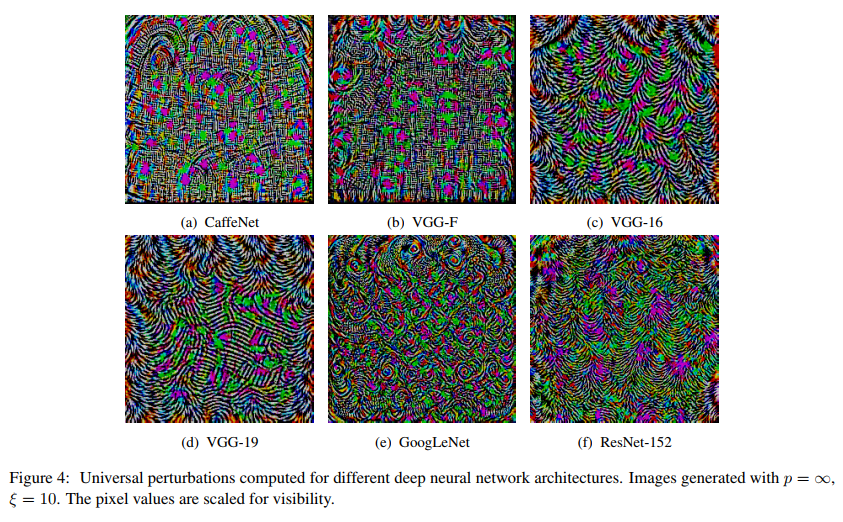

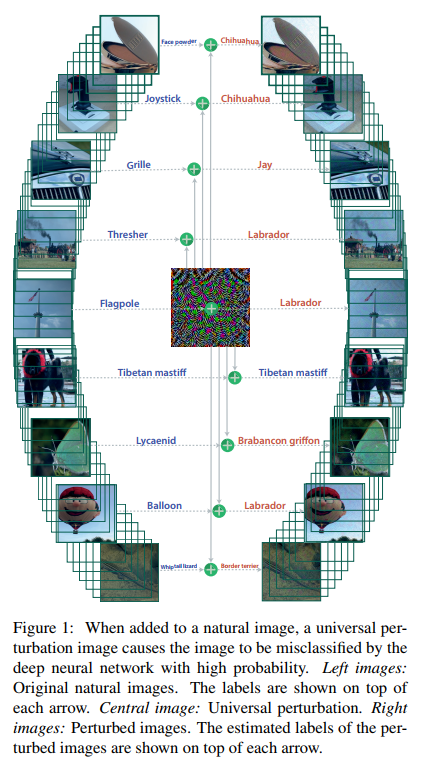

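The authors propose a systematic algorithm for computing universal perturbations: a single, image-agnostic perturbation that causes an image classification network to misclassify most of the images it is added to. The perturbations are also shown to generalize well across neural network architectures.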

The proposed algorithm has two parameters:

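- The maximum norm of the perturbation to be added to images, which bounds how large (and how visible) it may be

- The desired fooling rate, i.e. the fraction of images whose predicted label should change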
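Written as constraints on the perturbation \(v\), the two parameters look roughly as follows. The symbols \(\xi\) (norm bound), \(\delta\) (one minus the desired fooling rate), \(\hat{k}\) (the classifier's predicted label) and \(\mu\) (the distribution of natural images) are not defined in this summary; they are assumed here, following the paper's notation:

\[
\|v\|_p \le \xi, \qquad \Pr_{x \sim \mu}\big(\hat{k}(x + v) \ne \hat{k}(x)\big) \ge 1 - \delta
\]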

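The idea is to iterate over the images and build the “universal perturbation” \(v\) incrementally: for each image that the current \(v\) does not yet fool, compute the minimal modification to \(v\) that causes that image to be misclassified, then project the updated \(v\) back onto the allowed norm ball. Passes over the images repeat until the desired fooling rate is reached.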
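The loop can be sketched on a toy problem. This is a minimal illustration, not the authors' implementation. It assumes a linear classifier, so the minimal step across a decision boundary has a closed form (the paper uses DeepFool for deep networks), and it assumes an L2 norm bound. All names (`minimal_step`, `project_l2`, `universal_perturbation`, `xi`, `target_rate`, `max_passes`) are hypothetical.

```python
import numpy as np

def predict(W, b, x):
    """Label assigned by a linear classifier (assumed stand-in for a network)."""
    return int(np.argmax(W @ x + b))

def minimal_step(W, b, x, overshoot=0.02):
    """Smallest L2 step that pushes x across its nearest decision boundary.

    Closed form for a linear classifier; a small overshoot makes sure the
    point actually lands on the other side of the boundary.
    """
    scores = W @ x + b
    k = int(np.argmax(scores))
    best, best_dist = np.zeros_like(x), np.inf
    for j in range(W.shape[0]):
        if j == k:
            continue
        w = W[j] - W[k]
        gap = abs(scores[j] - scores[k])
        norm_w = np.linalg.norm(w)
        if norm_w == 0:
            continue
        if gap / norm_w < best_dist:
            best_dist = gap / norm_w
            best = (1 + overshoot) * (gap + 1e-6) / norm_w**2 * w
    return best

def project_l2(v, xi):
    """Project v onto the L2 ball of radius xi."""
    norm = np.linalg.norm(v)
    return v if norm <= xi else v * (xi / norm)

def fooling_rate(W, b, X, v):
    """Fraction of rows of X whose predicted label changes when v is added."""
    return float(np.mean([predict(W, b, x + v) != predict(W, b, x) for x in X]))

def universal_perturbation(W, b, X, xi, target_rate, max_passes=10):
    """Accumulate per-image minimal steps into one shared perturbation v."""
    v = np.zeros(X.shape[1])
    for _ in range(max_passes):
        if fooling_rate(W, b, X, v) >= target_rate:
            break
        for x in X:
            # Only update v for images that the current v does not fool yet.
            if predict(W, b, x + v) == predict(W, b, x):
                v = project_l2(v + minimal_step(W, b, x + v), xi)
    return v

# Toy usage on random data (purely illustrative, not the paper's setup).
rng = np.random.default_rng(0)
W, b = rng.normal(size=(3, 5)), rng.normal(size=3)
X = rng.normal(size=(200, 5))
v = universal_perturbation(W, b, X, xi=2.0, target_rate=0.8)
print(np.linalg.norm(v), fooling_rate(W, b, X, v))
```

The projection after every update is what keeps \(v\) within the norm budget. The `max_passes` cap is an added safeguard for the toy setting, since the target rate may be unreachable under a small bound.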

Experiments and Results

Dataset: ILSVRC 2012 validation set (50,000 images)

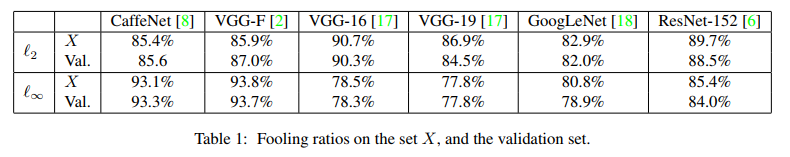

Note that in Table 1, “X” is the training set on which the universal perturbation is computed.