Evolving Neural Networks Through Augmenting Topologies

This paper presents a method called “NeuroEvolution of Augmenting Topologies” (NEAT), which learns both the weights and the topology of a neural network with a genetic algorithm, without backpropagation. As the authors put it, their method:

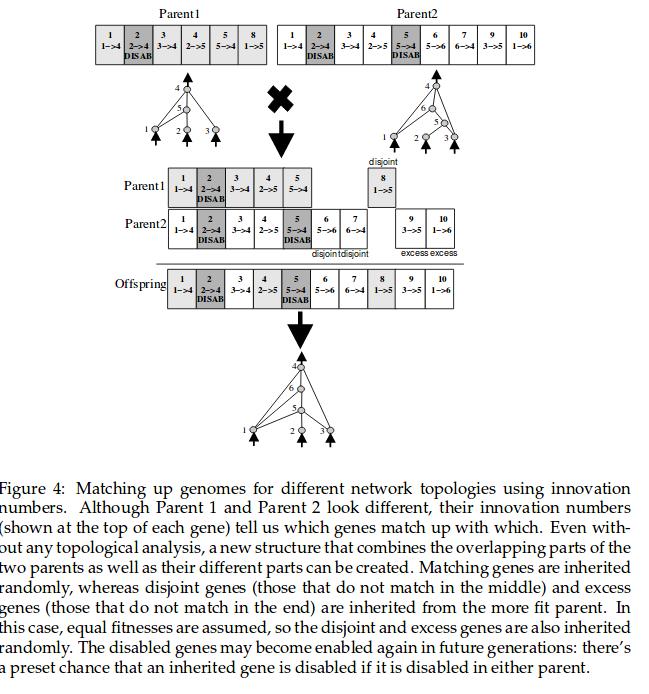

is based on applying three key techniques: tracking genes with historical markers to allow crossover between different topologies, applying speciation (grouping similar networks into species) to protect innovations, and growing topologies incrementally from simple initial structures.
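To make the first two techniques more concrete, here is a minimal Python sketch (not the authors' code). It assumes a genome is just a list of connection genes, each tagged with an innovation number that plays the role of the historical marker. The names `ConnectionGene`, `crossover`, and `compatibility_distance` are made up for illustration, and the default coefficients `c1`, `c2`, `c3` are placeholder values. Node genes, mutation, and the re-enabling of disabled genes are left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionGene:
    innovation: int  # historical marker: identifies when this connection first appeared
    src: int         # id of the source node
    dst: int         # id of the destination node
    weight: float
    enabled: bool = True


def crossover(fitter, weaker, rng):
    """Align two genomes by innovation number and build a child.

    Matching genes are taken from either parent at random; genes that only
    the fitter parent has are kept, and genes that only the weaker parent
    has are dropped.
    """
    weaker_by_innovation = {g.innovation: g for g in weaker}
    child = []
    for gene in fitter:
        match = weaker_by_innovation.get(gene.innovation)
        if match is not None and rng.random() < 0.5:
            child.append(match)
        else:
            child.append(gene)
    return child


def compatibility_distance(a, b, c1=1.0, c2=1.0, c3=0.4):
    """Distance used to decide whether two genomes belong to the same species.

    Computes c1 * E / N + c2 * D / N + c3 * W, where E counts excess genes,
    D counts disjoint genes, W is the mean absolute weight difference of the
    matching genes, and N is the size of the larger genome.
    """
    a_by = {g.innovation: g for g in a}
    b_by = {g.innovation: g for g in b}
    matching = a_by.keys() & b_by.keys()
    unmatched = a_by.keys() ^ b_by.keys()
    # Excess genes lie beyond the last innovation number of the other parent;
    # the remaining unmatched genes are disjoint.
    cutoff = min(max(a_by, default=0), max(b_by, default=0))
    excess = sum(1 for i in unmatched if i > cutoff)
    disjoint = len(unmatched) - excess
    n = max(len(a_by), len(b_by), 1)
    if matching:
        mean_weight_diff = sum(
            abs(a_by[i].weight - b_by[i].weight) for i in matching
        ) / len(matching)
    else:
        mean_weight_diff = 0.0
    return c1 * excess / n + c2 * disjoint / n + c3 * mean_weight_diff
```

Calling `crossover(parent_a, parent_b, random.Random(0))` with `parent_a` as the fitter parent returns a child that has exactly the innovation numbers of `parent_a`. Two genomes would then be placed in the same species when `compatibility_distance` falls below some threshold, which is a tunable parameter.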

The main drawback of this approach is that it is practical only for very small neural networks (typically fewer than 10 neurons), since thousands of candidate networks have to be evaluated before convergence.

NEAT even has its own Wikipedia page!